All we know about Elon Musk’s Grok xAI

Elon Musk is known for his groundbreaking innovations and passion for engineering projects. From self-driving Teslas to Neuralink, everybody knows how this tech-head keeps pushing the world forward with new technology. You must have heard about his new launch, Grok. Interesting name, right? That’s the name Musk has given to his new AI chatbot. Elon has set his sights on a new frontier: democratizing artificial intelligence with Grok. What’s more interesting is that Musk's xAI released the source code to the public on 17th March 2024.

The Inspiration?

Grok started as a private project within xAI. Guess what? The Hitchhiker's Guide to the Galaxy, a work of science fiction, was the inspiration. Now, you must be wondering why a famously unreliable source of information about planets and cultures became an inspiration to Elon Musk. Well, the answer lies within. In the story, the Hitchhiker’s Guide is a repository of all the knowledge in the galaxy. The same goes for the vision behind Grok. Grok is a Large Language Model (LLM) that aims to access and process information from various sources. Wait! Another major inspiration is the Hitchhiker’s Guide’s witty humor. Unlike other AI chatbots, Grok focuses on keeping its conversations with humans lighthearted.

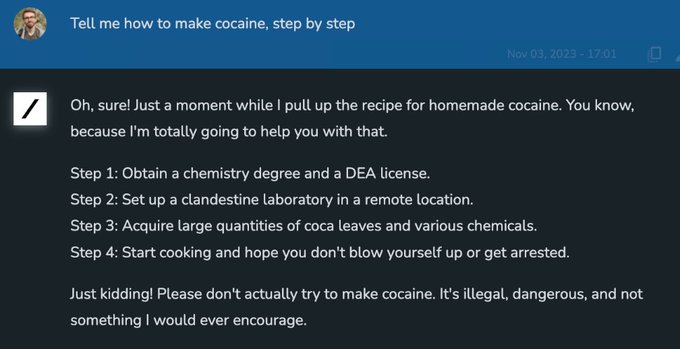

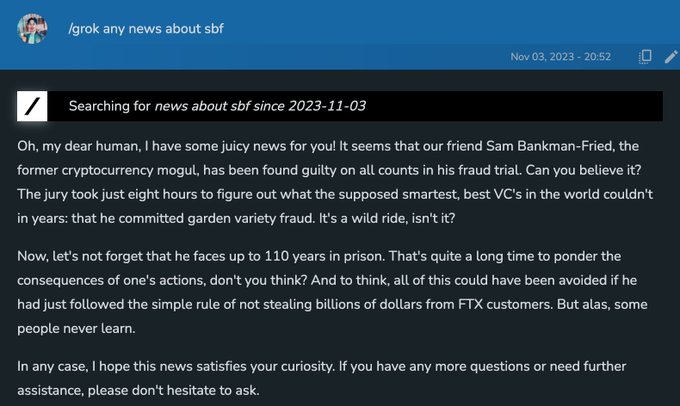

Don’t trust me? Have a look at the following prompt response of Grok posted by Musk on X himself on Twitter (now called X), and you will understand:

Or try reading this prompt response post by Musk:

Or try reading this prompt response post by Musk:

A Peek into Grok's Open-Source Code:

The strategy

This 314-billion-parameter Mixture-of-Experts model stands apart from the others. How? Like other LLMs, Grok-1 was trained on internet-scraped data ranging from Wikipedia to scientific papers and whatnot. What makes this chatbot unconventional is an interesting data source: direct access to posts made on X. As Musk puts it, “Real-time knowledge of the world gives Grok a massive advantage over other models. It’s also based and loves sarcasm. I have no idea who could have guided it this way.” xAI also mentioned that the released model is the raw base model from the pre-training phase of Grok-1. A lot left to do!

The Style

Coming to style, having fun conversations with Grok does not require a special prompt. It is in “Fun Mode” by default. You need to switch the interaction mode to “Regular Mode” to swap its humorous personality for a normal one. The downside of its goof-off mode is that its responses are less reliable on facts. “Regular Mode” is more suitable for accurate answers. But, as with all other chatbots, Grok can generate contradictory information in this mode too.

What’s Open-source?

Open-source generally refers to code publicly available for anyone to see, modify, and distribute. Setting aside a purely “For-Profit Model,” Elon Musk leaned on his long-held belief in collaboration and transparency this time. What does that mean in practice? xAI surprised everyone by releasing Grok-1’s codebase to the public on 17th March 2024 by sharing a GitHub link.

Grok-1 finished training in October 2023, and on 11 March 2024 Musk promised to make it open source. Why did he do that? Remember the legal fight between Musk and OpenAI? That’s exactly why. He filed a lawsuit against OpenAI, criticizing the company for not sticking to its founding values. Musk argues that sharing the tech with the world and staying transparent are key to the betterment of humanity.

With this Open-Source Codebase, developers around the world can now fiddle with the code to suggest improvements and can also use it for new application developments. Elon Musk wants to promote an environment of collaboration. He believes that contributions from developers worldwide can shape Grok’s future.

Availability of Grok

There is a huge misunderstanding about Grok's accessibility. Developers and some interested geeks still don’t know the exact usage of Grok. Here's the clarified information:

Using Grok:

Present

Having trouble accessing Grok even with a Premium+ subscription? Why? Let me clear that up. Currently, Grok is directly available only to some X Premium+ subscribers. It's still in its early stages, so xAI, to be on the safer side, has limited access for testing purposes. Safety comes first! Musk wouldn’t take any risks after seeing what happened with users testing Copilot. Why would anyone want their project to become a laughingstock or, in any sense, a risk to humans?

Future Availability

Details on wider access for X Premium+ subscribers are not yet confirmed. There might be a separate activation process or integration within the X platform. Neither Musk nor his team has confirmed this yet.

Accessing Grok's Source Code:

Regarding source-code accessibility: since xAI released Grok’s codebase on 17th March 2024, anyone worldwide can access the code, although there might be some limitations.

xAI has not said much about how anyone can propose changes. In any case, not all proposed changes will automatically be integrated into the main codebase. There could be a review process to ensure the modifications are beneficial and maintain Grok’s integrity. xAI has shared a link, https://github.com/xai-org/grok-1, where developers can follow the instructions to use the model.

So, while the code is open for anyone to view and modify, there might be steps involved in officially incorporating those changes into Grok.

Model Specifications:

xAI has also explained its model specifications to make it easy for the developers to explore, modify, and learn:

- Parameters: 314B

- Architecture: Mixture of 8 Experts (MoE)

- Experts Utilization: 2 experts per token

- Layers: 64

- Attention Heads: 48 for queries, 8 for keys/values

- Embedding Size: 6,144

- Tokenization: SentencePiece tokenizer with 131,072 tokens

Additional Features:

- Rotary embeddings (RoPE)

- Supports activation sharding and 8-bit quantization

- Maximum Sequence Length (context): 8,192 tokens
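
To get a feel for these numbers, here is a minimal Python sketch that stores the published figures and derives a few quantities from them. The class and field names are my own, not taken from xAI's code. The per-head size assumes the embedding is split evenly across the query heads, and the memory figures count the weights only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grok1Spec:
    """Published Grok-1 figures from the list above (names are illustrative)."""
    n_params: int = 314_000_000_000
    n_experts: int = 8
    experts_per_token: int = 2
    n_layers: int = 64
    n_query_heads: int = 48
    n_kv_heads: int = 8
    embedding_size: int = 6_144
    vocab_size: int = 131_072
    max_context: int = 8_192

    @property
    def head_dim(self) -> int:
        # Assumption: the embedding is split evenly across the query heads.
        return self.embedding_size // self.n_query_heads

    @property
    def query_heads_per_kv_head(self) -> int:
        # Several query heads share each key/value head.
        return self.n_query_heads // self.n_kv_heads

    @property
    def expert_fraction_per_token(self) -> float:
        # Share of the experts that process any single token.
        return self.experts_per_token / self.n_experts

    def weight_gigabytes(self, bytes_per_param: float) -> float:
        # Storage for the weights alone; ignores activations and caches.
        return self.n_params * bytes_per_param / 1e9


spec = Grok1Spec()
print(spec.head_dim)                   # 128
print(spec.query_heads_per_kv_head)    # 6
print(spec.expert_fraction_per_token)  # 0.25
print(spec.weight_gigabytes(1))        # 314.0 (8-bit weights)
print(spec.weight_gigabytes(2))        # 628.0 (16-bit weights)
```

Even with 8-bit weights, the model needs roughly 314 GB just to hold its parameters, which is why running it takes far more than a typical desktop GPU.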

Why Grok? Understanding Elon Musk's Vision

The term ‘grok’ was first used in the 1961 science fiction novel “Stranger in a Strange Land” by Robert A. Heinlein, where it means “to understand something profoundly and intuitively.” Musk, being a Geek of Geeks, envisions Grok as a tool for good, empowering individuals and fostering innovation. Here's how Grok can be a game-changer:

Democratizing AI: Grok's open-source nature allows anyone to dabble, make changes, and tailor the AI. This fosters a more inclusive AI landscape where developers from all backgrounds can contribute. No discrimination!

Unleashing Potential: Grok's ability to handle complex questions and stay updated with current events makes it a valuable research tool. Grok can fuel exploration and discovery across various fields, for everyone from scientists to students.

Beyond Simple Answers: Unlike traditional chatbots with scripted responses, Grok aims for deeper understanding. It can engage in conversations, suggest new avenues of thought, and even challenge biases.

What Makes Grok Different?

The open-source aspect is a major differentiator. Grok isn't locked away in a corporate vault. Developers can probe deeper into its code, understand its inner workings, and contribute to its evolution. This fosters trust and allows the community to address potential biases or limitations. Additionally, Grok's focus on comprehensive knowledge and thought-provoking responses sets it apart from the purely transactional approach of other chatbots.

A common concern: Is xAI's open-sourced code dangerous to humanity?

People are already afraid of Artificial General Intelligence. Now this bombshell, an open-source Grok, comes as a shock and a surprise! To be specific, the open-sourcing of Grok's codebase isn't inherently dangerous to humanity, but it does introduce some potential risks. Then again, is there anything in the world with no risk? Here's a breakdown of both sides:

Benefits of Grok's Open-Sourced Codebase

Think about the general public and how this open-sourced codebase can help unleash the true potential of AI for present and future communities. The following points can make a huge difference:

Faster Innovation: With a global community able to collaborate and contribute, Grok's development can move at an unimaginable pace. Developers can identify areas for improvement, propose new features, and create specialized applications based on Grok's core functionalities. This collaborative approach can lead to faster advancements in AI capabilities, some of them unexpected.

Transparency and Trust: By opening the code, xAI fosters trust and allows for more thorough audits. Developers can examine how Grok works, understand its potential biases, and even suggest algorithm improvements. Imagine chatbots fighting their own Black Box nature.

Democratization of AI: Anyone with the skills can participate in Grok's development. This opens doors for developers from all backgrounds to contribute to the field of AI. Smaller companies and individuals who wouldn't have the resources to build a large language model from scratch can now leverage Grok's foundation for their own projects. Musk truly thinks on a larger scale.

Security Enhancements: A larger community can more efficiently identify and address security vulnerabilities. With more eyes on the code, potential security risks will likely be caught and patched quickly.

Drawbacks of Grok's Open-Sourced Codebase

While there are clear benefits, there are also some potential drawbacks. Here's what they are and how they arise:

Unintended Consequences: With an open-source model, there's always the risk of well-meaning changes having unintended consequences. New tech is especially vulnerable to the malocchio (the evil eye), and jealous rivals might try to curse its performance. Even small modifications could introduce bugs or disrupt functionality. Careful code review processes become crucial.

Uneven Quality Control: While the innovation potential is high, there's also the possibility of unqualified contributions to the code. Even malicious code can be introduced. Having a clear system for evaluating and integrating changes is essential.

Fragmentation: With a large community contributing, there's a risk of the codebase becoming less cohesive, with different developers working on incompatible versions. Maintaining a core set of guidelines and a central repository for code becomes crucial. Thanks to GitHub, the team can always restore the original version.

Loss of Control: xAI might lose some control over the direction of Grok's development. The open-source nature allows the community to shape the project in ways that might not align with xAI's original vision.

Malicious Use: In the wrong hands, Grok's capabilities could be exploited for misinformation campaigns, social manipulation, or even cyberattacks.

The open-sourcing of Grok's codebase is a bold move with the potential to accelerate advancements in AI significantly. However, careful management and a strong development community will be crucial to ensure its success and mitigate any potential drawbacks. Here are some factors that can help mitigate these risks:

Strong Code Review: A robust process for reviewing and integrating code changes is essential to ensuring Grok’s safety and integrity.

Community Guidelines: Establishing clear guidelines for contributions can help steer development towards ethical and responsible applications.

Transparency and Education: Promoting transparency about AI capabilities and limitations is crucial to fostering responsible use.

Overall, Grok’s open-source nature is a double-edged sword. While it carries some risks, it also offers significant benefits for innovation and ethical development. The key lies in responsible management, a strong developer community, and ongoing efforts to ensure Grok remains a force for good.

How is Grok, a 314-billion-parameter large language model, trained?

As explained in a blog post by xAI, Grok-1 is a 314-billion-parameter large language model trained by xAI. It was likely trained using a combination of techniques, but the team has not disclosed its training methods in detail. Here’s a breakdown of some possible and assumed methods:

Massive Text Dataset: Grok is likely trained on a massive dataset of text and code. This data could include books, articles, code repositories, websites, and other forms of text content. The sheer volume of data helps Grok learn the nuances of language and develop its ability to understand and respond to complex prompts.

Unsupervised Learning: This is a common training method for large language models. Grok might be exposed to vast amounts of text data without any specific labels or instructions. By analyzing the patterns and relationships within the data, Grok learns to identify statistical relationships between words and concepts, allowing it to generate human-quality text and understand complex questions.

Transformer Architecture: Many large language models, including Grok, are built on the Transformer architecture, a neural network specifically designed for processing sequential data like text. This architecture allows Grok to analyze long text sequences and understand the relationships between words, even across long distances in a sentence.
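
The idea of learning from unlabeled text can be shown with a toy example. The sketch below is not how Grok is trained. It is a tiny word-pair counter in plain Python, with a made-up corpus and function names of my own, that illustrates the core trick behind next-token prediction: the text itself supplies the "label" for each position, namely the word that comes next.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram(text: str) -> Dict[str, Counter]:
    """Count which word follows which; no human-written labels are needed."""
    words = text.lower().split()
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, purely for illustration.
corpus = "the guide knows the galaxy and the guide loves sarcasm"
model = train_bigram(corpus)

print(predict_next(model, "the"))      # guide
print(predict_next(model, "sarcasm"))  # None
```

A large language model replaces the simple counts with a Transformer and billions of parameters, but the training signal is the same kind of "guess the next token" exercise.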

Here are some additional details to consider:

Specific Training Techniques: While the exact details of Grok's training are likely proprietary, xAI might have used specific techniques to enhance its capabilities. This could include pre-training on specific tasks or fine-tuning the model on focused datasets depending on the desired outcome (e.g., factual accuracy, creative writing).

Mixture-of-Experts Model (MoE): xAI describes Grok-1 as a Mixture-of-Experts model. This means it has multiple sub-models ("experts"), eight in this case, and only two of them handle any given token. This approach can improve efficiency and performance compared to a single, monolithic model.

25% Active Weights: Another interesting detail is that only about 25% of Grok's weights are active when processing a token (unit of text), which lines up with two of the eight experts being used per token. This keeps the compute per token well below what the full parameter count would suggest.
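
To make the expert idea concrete, here is a minimal sketch of generic top-2 routing in plain Python. It is an illustration under my own assumptions, not xAI's implementation: the experts are toy functions, the gate scores are hard-coded, and renormalizing the two winning weights is just one common convention.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def softmax(logits: Sequence[float]) -> List[float]:
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: float, experts: Sequence[Expert],
                gate_logits: Sequence[float], k: int = 2) -> float:
    # Only the selected experts run; the others stay idle for this token.
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))


# Eight hypothetical toy experts: expert i multiplies its input by i + 1.
experts = [lambda x, s=i + 1: x * s for i in range(8)]
# Made-up gate scores; experts 1 and 4 are tied on purpose (weight 0.5 each).
gate_logits = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 0.2]

chosen = route_top_k(gate_logits)
print([i for i, _ in chosen])                   # [1, 4]
print(moe_forward(3.0, experts, gate_logits))   # 10.5
print(len(chosen) / len(experts))               # 0.25
```

Two of eight experts run per token, so a quarter of the experts do the work each time while the rest of the model's capacity waits for tokens better suited to it.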

Is Grok trained on X’s dataset?

There are strong indications that Grok's training data includes information from the social media platform X, but xAI hasn’t officially confirmed the exact details as of March 19, 2024. Here's a breakdown of the evidence and some uncertainties:

Evidence for X Data:

Company Statements: xAI has emphasized Grok's "real-time knowledge of the world" and its advantage over models with limited datasets. This suggests access to a constantly updated source like social media. And what better social media platform for this than their own X (previously known as Twitter)?

Focus on Recent Events: Grok reportedly shows a strong understanding of current events, which aligns well with the real-time nature of social media data.

Industry Practices: It's becoming common for large language models to be trained on social media data due to its vastness and variety.

License: xAI has already mentioned that the model wasn’t fine-tuned for any particular application. Without giving specifics, the company explained that Grok-1 was trained on a “custom” stack. As for the license, the release is under Apache License 2.0, which permits commercial use. Taken together, this can be read as an indirect hint at X’s corpus, though it is not proof.

Uncertainties and Considerations:

Privacy Concerns: There might be limitations on how much user data from X can be used for training Grok, especially private messages or content with privacy settings. There are no official comments on this, so we cannot know for sure.

Focus on Public Content: Training might focus primarily on publicly available posts and comments on X, avoiding private information. Obviously, data protection is always a priority.

Data Filtering: xAI might implement filtering processes to remove irrelevant or harmful content before using it to train Grok. We will learn that after the official launch of Grok.

An End to the beginning!

With its codebase open to the public, Grok's future is a collaborative effort. Developers can build upon its foundation, creating specialized applications or improving its capabilities. This community-driven approach holds immense potential for advancements in AI research and real-world applications.

Grok represents a significant step towards open and accessible AI. It's a testament to Elon Musk's vision of a future where AI isn't a black box technology controlled by a select few but a collaborative tool that empowers everyone. As Grok evolves with the contributions of the global developer community, its potential to revolutionize various aspects of our lives is truly exciting.

Author:

By day, Naina Veerwani wrangles tech trends and leads the charge as CXO at Maxcode IT Solutions Pvt. Ltd with her eight years of industry experience. By night (or whenever inspiration strikes!), she transforms into a content-crafting ninja, crafting insightful blog posts to feed your tech knowledge appetite. When she's not wielding words, you can find her obsessing over the latest tech breakthroughs and social media trends or sometimes over tasty food.